HapticNav

Empowering the Visually Impaired through Haptic Navigation

Project Highlight

HapticNav, developed by WearWorks, stands out as one of the world's first haptic navigation apps, specifically designed for the blind and visually impaired. It uniquely guides users solely through voice-over instructions and intuitive haptic vibrations. In my role as the lead designer, I've focused on enhancing user-friendliness, ensuring seamless functionality across different devices, and continuously refining the app based on real-world feedback to maintain its position as a pioneering solution in accessibility technology.

Timeline: 2021 - Present

My Role: Competitor Analysis, User Interview, Questionnaire, Persona, Storyboard.

Team: WearWorks Design Team

Tools: Figma, Adobe XD, Sketch, Maze

1 - Discover: Desktop Research, User Interview

2 - Define: Persona, User Journey Map

3 - Ideate: Brainstorm, Value Proposition

4 - Prototype: Paper Wireframe, Lo-fi Prototype

5 - Test: Usability Test, Heuristics Evaluation

6 - Finalize: Hi-fi Prototype, Success Metrics

Overview

The Story

HapticNav is a family of products that includes mobile apps (iOS & Android). The product began as the Wayband design, initially conceived by WearWorks' CEO, Kevin, and Keth. The Wayband is a wearable device that utilizes haptic vibrations to aid individuals with visual impairment and parietal impairment in navigating the digital world. I joined the team in 2021 with a mission to enhance our products for our diverse global user base and to envision an accessible mapping experience for the blind and visually impaired individuals of the future.

Compared with my previous role as a UX intern at a different company, the WearWorks HapticNav team was significantly smaller and more agile. Initially, I was the sole UX designer on the team, and I greatly appreciated this startup-like culture. Organization was a key aspect I valued. Simple practices such as establishing a centralized file system, sending out weekly email updates, setting quarterly objectives, and developing a project allocation process not only increased the visibility of UX contributions and collaboration around them but also laid a strong foundation that scaled effectively as my team expanded.

How can we assist individuals who are blind or visually impaired in navigating through an app that utilizes haptic vibrations for orientation?

Focus on User research

According to a 2021 study, 43 million people worldwide live with blindness and 295 million live with moderate-to-severe visual impairment. Building an accessible navigation tool for the blind and visually impaired is an essential stepping stone toward a more accessible society. Beyond building an efficient utility app, we wanted to better understand our users' current workflows and pain points so we could provide better experiences for the most important use cases.

For this, we needed to do user research, but in the beginning we didn't have a user researcher on the team, so I had to do it myself. After digging through past studies and organizing them in a research archive, I created a UX research program focused on both the international and US markets, where HapticNav had a large and growing user base. The program offered a variety of research methods: Google Surveys allowed us to ask our users anything in a few clicks, in-house studies helped us settle minor UI questions quickly, vendor-driven usability studies gave us deeper insights internationally, and annual immersive discovery trips gave us valuable first-hand knowledge of our users globally. Using this program, we conducted more user research studies in my first year on the WearWorks HapticNav team than in the 5 previous years combined.

Improving consistency

As our platforms evolved, inconsistencies emerged over time due to various contributors. To address this, I've prioritized consistency as a core principle. Now, every new feature is conceptualized and designed with a unified approach across all our platforms.

Transforming the Brand's Design Language

I focus on crafting and enhancing innovative design languages for digital product services, customized for iOS and Android platforms. Elevating the digital experience remains my central goal.

How can we create a navigation product that caters to both VoiceOver and visual users? What strategies can we employ to optimize interaction layers, simplifying the experience for VoiceOver users?

IDEATE

BRAINSTORM

Based on the above discoveries, we brainstormed some potential features that can solve the pain points.

A significant challenge is the user's inability to save locations using VoiceOver in various navigation apps available on the market.

What is VoiceOver for the visually impaired?

Hear what’s happening on your screen.

VoiceOver describes exactly what’s on your screen, even in braille. You can use a refreshable braille display connected via Bluetooth to your Apple device or enter braille directly on the touchscreen using Braille Screen Input.

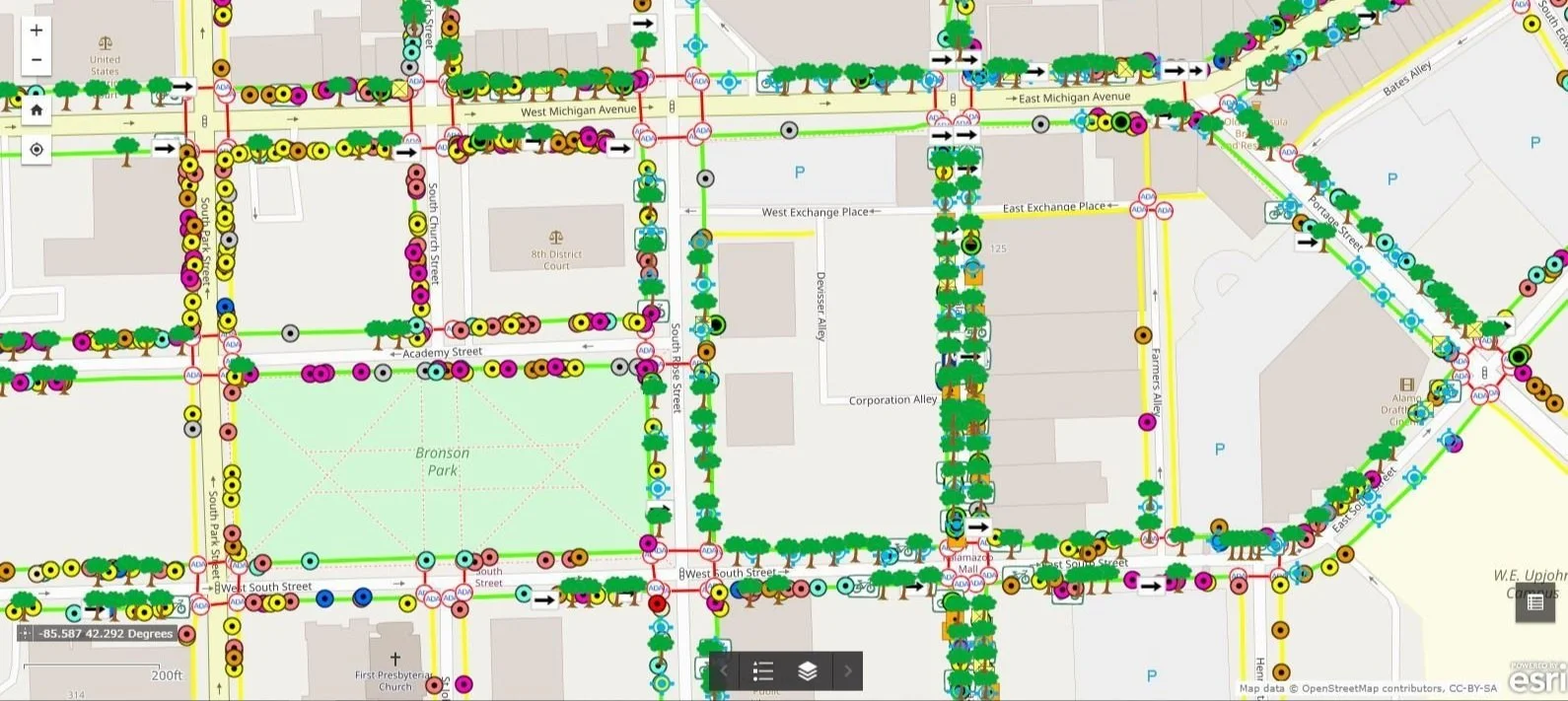

User Road Map & System Design

As the lead designer responsible for the app's design and layout, I have overseen the entire system's conceptualization and development. Over the course of several months, extensive research has not only led to a transformation but also an expansion of the overall user flow within the app.

Discover & Define

Research Partner

We intensify our research with each passing year, uncovering numerous problems that need solving. We've found ways to address these issues, specifically focusing on improving the user experience. This has led to the creation of the first fully usable voice-over navigation product.

Joey Down

Simon Wheatcroft

Marcus Engel

Markeith Price

DEFINE

Primary User Research

Survey & User Interview

We partnered with Lighthouse Manhattan to conduct a comprehensive survey, engaging over 100 individuals with varying degrees of visual impairment. Our aim was to gain deeper insights into how these users navigate cityscapes from one location to another. This survey focused on understanding the challenges they face with current navigational tools, specifically in terms of haptic vibration feedback and voice-over guidance. Our objective was to identify areas for improvement in HapticNav, ensuring it effectively meets the unique needs of low vision users in urban environments.

User Journey Map

Pain Point

1 - Low-vision users struggle to navigate digital interfaces due to a lack of available assistance tools in the market.

2 - Users encounter difficulties saving locations in the apps on the market when relying solely on VoiceOver.

3 - Users have difficulty sharing their location with a family member.

Function Innovation 1:

Haptic Compass

We prototyped it this way to achieve a minimalistic design that avoids clutter, thereby reducing cognitive load for the user. The haptic feedback mechanism is central to the design, as it provides a direct, physical sense of direction, which is far more intuitive for someone who cannot rely on visual information. The inclusion of a customizable 'Haptic Delay' slider allows users to adjust how frequently they receive these physical cues, giving them control over the navigation experience.
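The direction-sensing idea behind the Haptic Compass can be illustrated with a short sketch. This is not the HapticNav implementation. The function names, the 15-degree tolerance, and the cue labels are assumptions made for illustration, and `haptic_delay_s` simply stands in for the value chosen on the 'Haptic Delay' slider. The sketch computes the bearing from the user to the destination, compares it with the device heading, and decides which cue to give and whether enough time has passed to pulse again.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2.

    Returns degrees clockwise from north, in [0, 360).
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def heading_error(heading, bearing):
    """Signed smallest angle from heading to bearing, in [-180, 180).

    Positive means the destination lies to the user's right.
    """
    return (bearing - heading + 180.0) % 360.0 - 180.0


def compass_cue(heading, bearing, tolerance_deg=15.0):
    """Pick a cue label (hypothetical names) from the heading error."""
    err = heading_error(heading, bearing)
    if abs(err) <= tolerance_deg:
        return "on_course"
    return "turn_right" if err > 0 else "turn_left"


def should_pulse(now_s, last_pulse_s, haptic_delay_s):
    """True once at least haptic_delay_s seconds have passed since the last pulse."""
    return now_s - last_pulse_s >= haptic_delay_s
```

A larger `haptic_delay_s` produces fewer pulses per minute, which is the trade-off the slider exposes: more frequent confirmation versus less vibration fatigue.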

Function Innovation 2:

Turn by Turn Navigation

The turn-by-turn navigation feature in HapticNav is particularly beneficial for blind and visually impaired users, especially when navigating sidewalks. This functionality offers precise, step-by-step directions, allowing users to confidently and safely move through urban environments. For individuals with visual impairments, traditional visual cues in navigation apps are not usable, making auditory and tactile feedback essential.

HapticNav's special blend of voice instructions and haptic feedback (vibrations) provides an intuitive way to understand directions without the need for visual input. This is crucial on sidewalks, where attention to the surrounding environment is key, and the tactile sensations offer guidance without overwhelming the user with audio information. This approach enables visually impaired individuals to navigate independently, reducing reliance on others and enhancing their freedom and mobility in daily life.

How Might We

How might we create a map feature that lets users pin their location using just voice commands?

Case Study 1:

Pin Location

One of our key research findings was that users are eager to save locations with ease, even with limited visual access.

Crafting the inaugural mapping feature that enables users to effortlessly mark their location with simple voice commands.

Currently, there is no software available to assist blind or visually impaired users in pinning their current or desired locations on a map. I have taken the initiative to research and develop one of the very first location-pinning functions based on voice-over technology.

VALUE PROPOSITION

HapticNav introduces tactile feedback, complementing VoiceOver to simplify digital interface navigation for low-vision users.

Enhanced 'Save Location' feature in HapticNav, designed for easy use with VoiceOver, streamlines the process for users.

HapticNav's intuitive 'Share Location' option allows users to easily communicate their whereabouts to family members.

Prototype

Solution

Pin Location

'Easy Pin' feature enabled by voice commands for hands-free location marking.

Context-sensitive activation, where the pinning button becomes available only when voice-over is in use.

Tailored for the visually impaired, filling a gap in the market with one of the first voice-over-based location-pinning functions.
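The context-sensitive behavior described above can be sketched in a few lines. This is an illustrative model only, not the app's code: `Pin`, `PinStore`, `pin_button_available`, and the announcement text are all hypothetical names. On iOS the screen-reader state would typically come from `UIAccessibility.isVoiceOverRunning`; here it is passed in as a plain boolean.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pin:
    name: str
    lat: float
    lon: float


@dataclass
class PinStore:
    """In-memory list of saved pins (hypothetical storage)."""
    pins: List[Pin] = field(default_factory=list)

    def drop_pin(self, name: str, lat: float, lon: float) -> str:
        """Save a pin and return the text a screen reader could announce."""
        self.pins.append(Pin(name, lat, lon))
        return f"Pinned {name}"


def pin_button_available(voice_over_running: bool) -> bool:
    """The Easy Pin control is exposed only while the screen reader is active."""
    return voice_over_running
```

Returning the announcement text from `drop_pin` keeps the confirmation step explicit, so a user who cannot see the map still learns that the pin was saved.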

How Might We

How can we leverage VoiceOver to facilitate location-saving in our HapticNav system?

Case Study 2:

Share Location with Family member

Enable both visually impaired and sighted users to easily share their location with family members with a single button press.

Our research findings have unveiled a significant user demand for a streamlined process allowing them to effortlessly share their location with family members. The desire is to establish an efficient and user-friendly solution that facilitates easy location sharing, catering to both visually impaired and sighted users alike. This understanding emphasizes the importance of developing a feature that accommodates various user needs and abilities, ensuring a seamless and inclusive experience for all.

Prototype

VoiceOver to share location with family:

Voice-Enabled Sharing: Users can employ voice commands to activate location sharing, ensuring that the visually impaired can effortlessly share their current or a saved location without sighted assistance.

Universal Design: The solution is crafted to be user-friendly for all, regardless of visual ability, allowing for a seamless experience when sharing location details with family members.

Inclusive and Empathetic: By considering the various needs and abilities of our users, we've ensured that our feature promotes inclusivity, enabling blind, visually impaired, and sighted users alike to stay connected with ease.

Case Study - User Feedback

Voice-enabled sharing was highly praised for its simplicity and ease of use.

The feature's universal design was commended for inclusivity, making users feel more connected.

Feedback from Lighthouse Manhattan has been crucial in refining and improving the solution.

Case Study - User Feedback

Users appreciated the seamless integration of voice commands for location sharing.

The inclusive design received positive remarks for empowering visually impaired users with greater independence.

Collaboration with Lighthouse Manhattan provided actionable insights to further enhance user experience.

Final Design & Solution

Utilizing HapticCompass for a simplified visual navigation experience through haptic vibrations.

The user can switch between the HapticCompass and a traditional map for navigation, both utilizing HapticVibration feedback.

Navigate using a turn-by-turn route.

By employing turn-by-turn navigation, users receive step-by-step guidance, ensuring they are directed to the correct side of the sidewalk throughout their journey. This feature serves as a comprehensive aid, enhancing safety and convenience for pedestrians as they navigate their route.

Utilizing a dropped pin to indicate the user's location.

Develop a voice-controlled system that enables users to effortlessly drop a pin with a single action.

We're introducing vibration haptics on wearable devices, starting with the Apple Watch. This feature will use subtle vibrations to guide users through tactile feedback, offering a discreet and intuitive navigation aid for the visually impaired.

Reflection

In my role leading a small design team, our primary focus is on researching and improving the user experience (UX) for our company. It's an exciting opportunity, but I've encountered some challenges, especially when it comes to effectively communicating our research findings and goals to team members who are new to this kind of work.

One of the trickiest aspects is managing our objectives across different project phases while ensuring that we uphold consistent quality and delve deeply into our research, all with the constraints of a small team. It's a delicate balancing act—maintaining high standards while navigating the limitations inherent in our team size.

To address this, I've been working on setting up a systematic approach. This involves establishing a feedback loop where we actively gather insights and input from individuals with low vision. Their perspectives are invaluable in helping us create a more inclusive and accessible user experience.

Additionally, I'm keen on staying vigilant about our users' engagement levels and monitoring the influx of new users. Keeping track of these metrics helps us gauge the effectiveness of our design choices and identify areas where we might need to pivot or make improvements.

It's an ongoing process of learning and adaptation, but I'm committed to refining our approach to ensure that our design decisions are not only well-informed by thorough research but also responsive to the diverse needs of our user base.

Leading a small design team for the first time, my focus is on improving our company's user experience (UX). Communicating our research effectively to newcomers is a challenge. Balancing quality and deep research with our small team size is tough. I'm setting up a system to gather feedback from those with low vision while tracking user engagement and new user rates to enhance our approach continually.